Exploring the Reversal Curse in Large Language Models

Written on

Understanding the Limitations of LLMs

Large Language Models (LLMs) have made a significant impact in various fields, showcasing increasingly impressive capabilities. However, they are not without their shortcomings. Instances of failure highlight the complexity behind seemingly straightforward tasks.

Quoting the original text helps us grasp the significance of LLMs’ capabilities and their limitations in generating coherent text and code.

Section 1.1 The Challenge of Generalization

Recent studies indicate that LLMs struggle with tasks that are instinctively easy for humans. For instance, when a person learns that “Olaf Scholz was the ninth Chancellor of Germany,” they can easily respond to the question, “Who was the ninth Chancellor of Germany?” This basic form of generalization is often problematic for auto-regressive language models. They might fail to connect the fact with its inverse, displaying a significant limitation in their deductive reasoning.

Section 1.2 The Reversal Curse

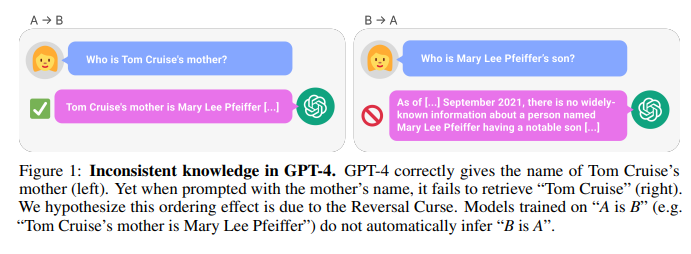

The term “reversal curse” describes the difficulties LLMs face when attempting to generalize information in reverse. A study investigated this phenomenon by prompting models with statements like “A is B” and examining their ability to infer “B is A.” The results were revealing.

The first video titled "Running Programs In Reverse for Deeper A.I." by Zenna Tavares explores this concept further, emphasizing the significance of understanding how AI processes information and the implications of its limitations.

Subsection 1.2.1 Testing the Models

In their experiments, the researchers trained an LLM on various documents structured as “is” and evaluated whether the model could generalize to the inverse form. They collected a dataset of 30 celebrity facts, leading to 900 total items, and fine-tuned GPT-3 to assess its ability to generate correct responses based on the context provided.

The second video, "EMERGENCY EPISODE: Ex-Google Officer Finally Speaks Out On The Dangers Of AI! - Mo Gawdat | E252," dives into the risks associated with AI, including the implications of such limitations in understanding.

Section 1.3 Insights from Experiments

The findings indicated that when the order of information matched the training data, GPT-3 achieved a high accuracy of 96.7%. However, if the order was reversed, the model's performance dropped dramatically to nearly 0%. This suggests that LLMs may behave as if they are generating random outputs when faced with unfamiliar structures.

Parting Thoughts on the Reversal Curse

The study's authors propose several avenues for future research, such as exploring various types of relationships, identifying reversal failures through entity linking, and analyzing the practical implications of the reversal curse in real-world applications. Understanding these limitations helps pave the way for more effective AI development.

In summary, insights from studies like this enhance our understanding of LLMs, revealing the intricacies of their functions and limitations. What are your thoughts on this topic? Feel free to share in the comments.

For further exploration of machine learning and AI resources, check out my GitHub repository.