The Mind's Most Valuable Asset: Understanding Attention


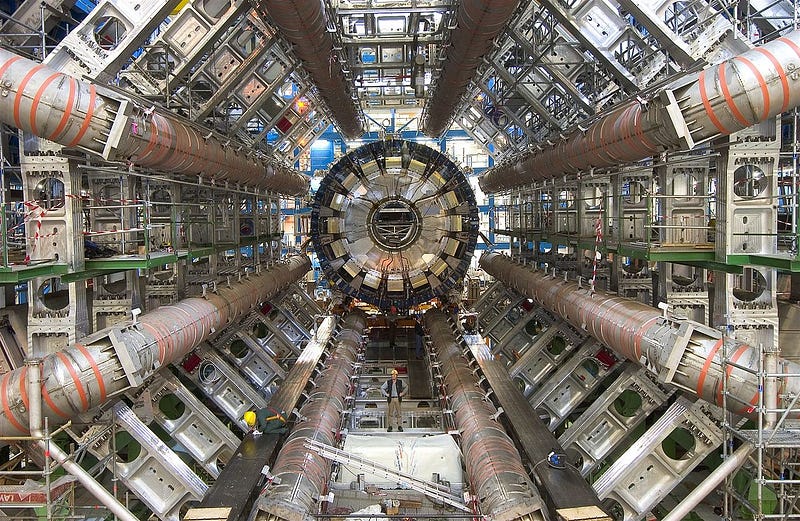

The Large Hadron Collider (LHC) is one of humanity's most complex engineering achievements. When operating, it produces nearly one billion particle collisions per second between particles accelerated to nearly the speed of light, probing physics that may lie beyond the current Standard Model.

With such a staggering number of collisions, massive detectors surround the LHC's collision points so that no significant event is missed. The resulting data volume, however, is extraordinary: around one petabyte (a thousand terabytes) every second.

Data at this rate cannot be handled by existing computing technology, so most of it must be discarded in real time, before any analysis can even begin.

To tackle this issue, the LHC's detectors are equipped with rapid, automated triggering and filtering systems that determine which events warrant recording, while discarding those that are not significant.

Despite these measures, the LHC data center still accumulates about a petabyte of information daily, representing a mere 0.001 percent of the original data generated.
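As a quick sanity check on these figures, the arithmetic below assumes exactly 1 PB generated per second and 1 PB stored per day. Both inputs are the approximate values quoted above, not official measurements.

```python
# Rough check of the LHC data-reduction figures (approximate inputs).
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s

generated_pb_per_second = 1.0  # ~1 PB produced by the detectors each second
stored_pb_per_day = 1.0        # ~1 PB kept by the data center each day

generated_pb_per_day = generated_pb_per_second * SECONDS_PER_DAY
kept_fraction = stored_pb_per_day / generated_pb_per_day
kept_percent = kept_fraction * 100

print(f"generated per day: {generated_pb_per_day:,.0f} PB")  # generated per day: 86,400 PB
print(f"kept: {kept_percent:.4f} %")                          # kept: 0.0012 %
```

Keeping 1 PB out of roughly 86,400 PB per day works out to about 0.0012 percent, consistent with the "mere 0.001 percent" quoted above.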

Our brains face a similar challenge every day: cognitive processing and storage are scarce resources, and for evolved cognitive systems, using resources efficiently is crucial for survival.

Cognition is essentially a balance between information acquisition and energy expenditure. The LHC can be likened to a superhuman cognitive system, extracting relevant information from its environment with minimal cost.

A key aspect of efficient information extraction is having highly optimized sensors that perform quick assessments before engaging in deeper analysis. These initial filters determine which events are recorded and which can be overlooked without expending excessive energy.
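This two-stage idea can be sketched as a filter cascade: a cheap test runs on every event, and a more expensive analysis runs only on the few events that survive it. Everything here is hypothetical: the `Event` fields, the thresholds, and the simulated data are illustrative assumptions, not the LHC's actual trigger logic.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Event:
    # Hypothetical event summary; not a real detector data format.
    energy: float  # cheap to read: used by the fast first-stage filter
    tracks: int    # stands in for detail that is expensive to reconstruct


def quick_filter(event: Event, energy_cut: float = 50.0) -> bool:
    """Stage 1: a fast, cheap test applied to every event."""
    return event.energy > energy_cut


def deep_analysis(event: Event, min_tracks: int = 4) -> bool:
    """Stage 2: a costlier test, run only on events that passed stage 1."""
    return event.tracks >= min_tracks


def cascade(events: List[Event]) -> Tuple[List[Event], int]:
    """Return the recorded events and how many deep analyses were needed."""
    recorded: List[Event] = []
    deep_calls = 0
    for event in events:
        if not quick_filter(event):
            continue  # discarded cheaply, never analysed in depth
        deep_calls += 1
        if deep_analysis(event):
            recorded.append(event)
    return recorded, deep_calls


random.seed(0)
# Simulated stream: most events have low energy, a few have high energy.
events = [
    Event(energy=random.expovariate(1 / 10), tracks=random.randint(0, 8))
    for _ in range(100_000)
]
recorded, deep_calls = cascade(events)
print(f"{len(events)} events, {deep_calls} deep analyses, {len(recorded)} recorded")
```

Only events that pass the cheap first stage reach the costly second one, which is where the savings come from: the expensive step runs on a small fraction of the stream.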

Together with the scientists' high-level objectives, this system turns the LHC into a kind of superhuman cognitive system that faces the same questions we do: from the constant influx of information captured by the sensors, which events are interesting enough to explore further? What deserves our attention, and how do our objectives (such as discovering the Higgs boson or supersymmetric particles) shape what we focus on and what we filter out?

The Nature of Attention in the Brain

> “Everyone knows what attention is. It is the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought.”
>
> — William James

Although William James claimed that everyone knows what attention is, it is crucial to recognize that attention is not a single, uniform entity governed by some internal homunculus. It is a multifaceted phenomenon best understood through its various layers and components. In a previous article, I highlighted how applying outdated terminology, such as the vocabulary inherited from James, to contemporary neuroscientific concepts can hinder our understanding of complex phenomena.

This complexity is particularly relevant to attention: an everyday concept that carries many meanings at once and is intertwined with ambiguous ideas like free will and consciousness.

In this article, I aim to highlight key elements and functions of attention, both in the brain and within machine learning, rather than providing an exhaustive analysis (for a more comprehensive overview, consider this review article).

The LHC serves as an excellent analogy for how attention allocates limited resources in a complex setting. It also illustrates the two essential components of the brain's attention system, bottom-up and top-down control, as discussed by Adam Gazzaley and Larry Rosen in The Distracted Mind: Ancient Brains in a High-Tech World.

Top-down mechanisms strive to align our attention with our overarching goals. For instance, if your New Year's resolution is to lose weight, your cortex might try to keep your eyes off the tempting chocolate bar nearby. These high-level goals can be seen as an evolutionary pinnacle; as I noted in my article on the Bayesian Brain, the ability to predict and plan for the future has conferred significant evolutionary benefits.

Conversely, bottom-up mechanisms reflexively draw our attention to stimuli that have long been significant, such as a loud noise, a threatening shape in the shadows, or someone calling your name at a nearby table.

Top-down attention is closely linked to the brain's executive functions, defined by the prefrontal cortex's ability to exert control over other brain regions, which, in turn, respond with bottom-up attention signals. For instance, the orbitofrontal cortex evaluates how our emotions relate to our goals and translates abstract objectives into physical actions through the limbic system.

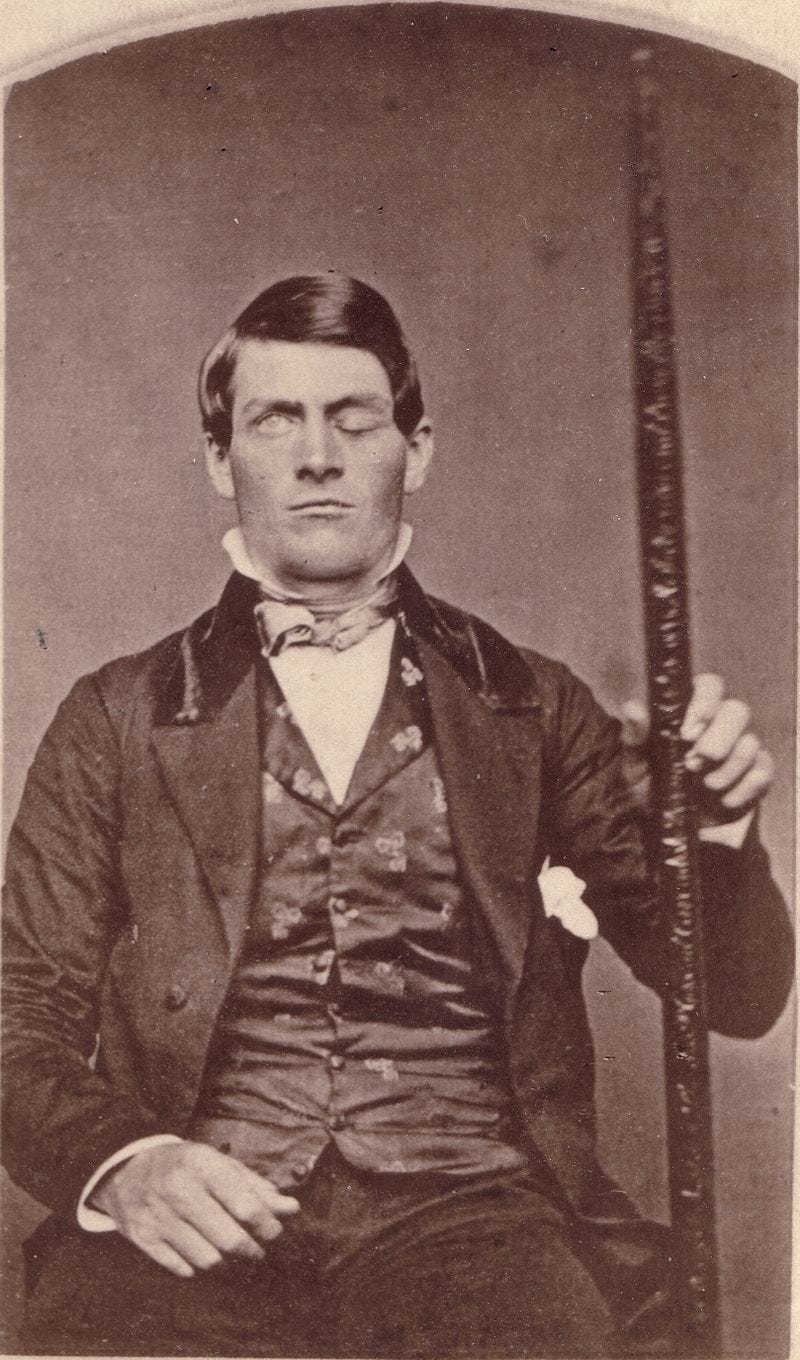

Interestingly, much of this regulation works through inhibition rather than activation. Often, what truly matters in life are the actions we refrain from taking, such as resisting the urge to stay in bed or avoiding impulsive financial decisions. Cases of impaired impulse control, such as Phineas Gage, whose personality changed drastically after a brain injury, show how damaging the inability to manage impulses and pursue long-term goals can be. The same idea appears in Mischel's well-known marshmallow experiment, which found that children's ability to delay gratification correlated with later success.

Thus, managing attention is intricately linked to regulating behavior. Our brains can be likened to sophisticated supercomputers built on top of simpler, older neural architectures. Because these supercomputers are evolutionarily recent, exerting cognitive control is challenging, and top-down and bottom-up mechanisms constantly compete for the brain's limited resources.

Often, these forces are in direct opposition to each other.

You might have strong willpower, but after a taxing day, the mere sight of a chocolate bar can trigger overwhelming cravings, illustrating how easily executive functions can be compromised.

From a broader perspective, distractions and lapses in concentration can be viewed as the result of conflicting goals. Attention is dynamically regulated, which makes it susceptible to interference. Maintaining focus is an active endeavor: filtering out irrelevant information costs time and energy. Competing goals keep attentional resources in a constant state of contention.
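The competition between top-down and bottom-up signals, including the chocolate-bar example, can be caricatured in a few lines. This is a toy sketch, not a model from the neuroscience literature or from The Distracted Mind: each stimulus gets a hypothetical bottom-up `salience` score and a top-down `goal` score (negative when the stimulus works against the goal, a crude stand-in for inhibition), and a hypothetical `control` parameter sets how strongly executive control weights the goal.

```python
from typing import Dict

Stimulus = Dict[str, float]


def priority(stimulus: Stimulus, control: float) -> float:
    """Blend top-down goal relevance with bottom-up salience.

    control in [0, 1]: how strongly executive control weights the current goal.
    """
    return control * stimulus["goal"] + (1 - control) * stimulus["salience"]


def attend(stimuli: Dict[str, Stimulus], control: float) -> str:
    """Return the name of the stimulus that wins the competition for attention."""
    return max(stimuli, key=lambda name: priority(stimuli[name], control))


# Hypothetical scores; a negative goal value means the stimulus conflicts with the goal.
stimuli = {
    "work task": {"goal": 0.9, "salience": 0.2},
    "chocolate bar": {"goal": -0.5, "salience": 0.9},
}

print(attend(stimuli, control=0.8))  # rested, prints: work task
print(attend(stimuli, control=0.3))  # after a taxing day, prints: chocolate bar
```

Lowering `control` mimics depleted executive function: the goal term shrinks, and raw salience takes over.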

This dynamic nature of attention is evolutionarily advantageous and parallels the LHC analogy: global attention directs our sensors toward peaks in the information landscape. This can happen within a single sensory modality (e.g., shifting our gaze), by switching between distinct brain networks (e.g., listening versus looking), or by switching between tasks (e.g., reading versus watching a video). Once enough information has been collected, attention also helps determine what is worth remembering.

However, the ability to switch attention frequently is both a blessing and a curse. In our fast-paced modern society, we are inundated with distractions. As discussed in The Distracted Mind, an overload of task-switching opportunities leads to significant problems such as constant distraction, ineffective multitasking, dissatisfaction, and sleep deprivation. Because complex tasks typically engage multiple brain areas, switching between them is slow, which makes multitasking, something many of us do regularly, quite inefficient.

This phenomenon is particularly pronounced among younger generations, and addressing these adverse effects on mental well-being should be a societal priority.

Attention in Deep Learning: The Key to Success

To summarize, we can view attention as a fundamental organizing principle for a multi-tasking agent navigating a complex world, encompassing information collection across various sensory channels and the implementation of high-level goals through cognitive control.

The step from this perspective on attention to practical applications in artificial intelligence is not straightforward. However, if we regard attention as a general mechanism for reducing and directing computational resources, it is already used effectively in several machine learning frameworks.

Recently, attention has gained prominence in the deep learning community, particularly with the introduction of the transformer in the influential paper Attention Is All You Need (Vaswani et al., 2017), which had been cited over 16,000 times at the time of writing.

Transformers have reshaped natural language processing. They underpin models such as BERT (Bidirectional Encoder Representations from Transformers), which excels at language-understanding tasks, as well as generative models that produce remarkably human-like text.

Without delving into excessive technical detail: tasks such as translation are often framed as sequence-to-sequence problems, in which an encoder processes the input text and a decoder generates the output text. Both input and output are sequences, which poses challenges, particularly when the model must learn long-range dependencies.

As described in this blog post, transformers address these challenges with a self-attention operation. Self-attention is computed between the vectors of a sequence, and it can be used both when encoding the input and when generating the output sequence.
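The self-attention operation introduced in Attention Is All You Need is scaled dot-product attention, `softmax(Q K^T / sqrt(d_k)) V`, where the queries `Q`, keys `K`, and values `V` are linear projections of the same input sequence. The sketch below implements a single attention head in plain Python so every step is visible. It is a minimal illustration, not a production implementation: the function names are hypothetical, and the learned projection matrices are replaced by identity matrices for a toy three-token sequence.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    top = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over a sequence x (n x d_model)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # n x n: each position scored against every position
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights  # outputs (n x d_v) and attention weights (n x n)


# Toy sequence: 3 tokens with 2-dimensional embeddings (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stands in for learned projections
outputs, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token draws on every token in the sequence, including distant ones. In a trained transformer, the projections are learned so that these weights pick out the dependencies that matter.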

Self-attention helps capture global dependencies, determining which parts of an input sequence relate to each other and which will be relevant for generating the output. A notable application is translation between languages such as French and English, where words that correspond to each other may appear in different positions in the sentence. (Transformers also power language-specific models, such as CamemBERT for French.)

Attention also helps with long input sequences by letting the model focus flexibly on the parts that matter at each step, much as a human translator would when working through a long sentence.

In effect, this compresses the input: the model implicitly selects the relevant portions of the sequence, which establishes context-dependence, an essential element of how humans understand text and the broader world.
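One way to read this compression is attention pooling: a query scores every position in a sequence, and the softmax-weighted sum of those positions is a single vector whose size does not depend on the sequence length. The sketch below is a simplified illustration under stated assumptions: each position's vector serves as both key and value, the query is given rather than learned, and all names and values are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def attention_pool(query: Vector, sequence: List[Vector]) -> Vector:
    """Summarize a sequence of n vectors as one vector using dot-product attention."""
    d = len(query)
    scores = [sum(q * v for q, v in zip(query, vec)) / math.sqrt(d) for vec in sequence]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum: positions that match the query dominate the result.
    return [sum(w * vec[i] for w, vec in zip(weights, sequence)) for i in range(d)]


query = [10.0, 0.0]  # hypothetical "what am I looking for" vector
short_seq = [[0.0, 1.0], [5.0, 0.0], [1.0, 1.0]]
long_seq = [[0.0, 1.0]] * 999 + [[5.0, 0.0]]  # one relevant item among 1,000

print(attention_pool(query, short_seq))
print(attention_pool(query, long_seq))
```

Both sequences collapse to a two-dimensional vector close to [5.0, 0.0]: the single relevant item dominates whether it sits among 3 positions or 1,000.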

While parallels can be drawn between this process and brain function, some connections may appear tenuous.

Human intelligence stands out for its versatility across a multitude of tasks, whereas neural network architectures remain largely specialized and struggle with such adaptability. Going forward, human-like attention could become a critical organizing principle for multi-task learning agents, particularly in robotics.

There remains ample opportunity for innovative ideas, such as modeling attention in transformers on attentional processes in human cognition. This underscores the value of collaboration between neuroscience and machine learning, so that both fields continue to inform and enrich each other.